Reborn: Robotics for the People, by the People

How Reborn is building the data engine, model layer, and infrastructure stack to power the next generation of intelligent, general-purpose robots.

We’re entering the era where AI isn’t just thinking; it’s moving. Robotics is no longer science fiction or a distant industrial tool. It’s the next frontier of artificial intelligence, and Reborn is on a mission to ensure that the future is owned and shaped by the people, not just powerful corporations.

As someone who’s been in love with robotics since my student days, competing with hand-built, RC car-sized bots that nervously rolled through obstacle tracks, I’ve seen firsthand how magical it is when code meets the real world. Back then, every turn, every move was pre-programmed, with no margin for error. You’d hold your breath hoping the robot followed your logic all the way to the finish.

Today, things are different. Smarter. More collaborative. And Reborn is part of that shift: opening access to robotic intelligence, rewarding contribution, and bringing the physical AI revolution into the hands of its community.

But building this future isn’t easy.

For all the excitement around robotics, progress is still held back by deep, structural challenges. The robots we dream of (machines that move through the world, learn from it, and help us in meaningful ways) aren’t just a matter of hardware. They require a new kind of intelligence, powered by the right data, the right models, and the right infrastructure to tie it all together.

That’s where Reborn steps in.

What Reborn Is Solving

Reborn is tackling three of the biggest roadblocks holding back the future of general-purpose robotics: the data gap, the model gap, and the embodiment gap.

Let’s break that down.

First up: the data gap. Training intelligent, adaptable robots requires massive amounts of high-quality motion data. Data that’s diverse, real-world, and multimodal. Right now, that doesn’t exist at the scale we need. The largest real-world robotics dataset is just over 2.4 million samples, and most of it comes from expensive, small-batch research efforts. Simulators can help, but they still struggle to replicate the complexity of physical environments.

Then there’s the model gap. Even with advances in imitation learning and vision-language-action models, today’s embodied AI is still brittle. The models are often overfitted, struggle in unfamiliar settings, and are tightly coupled to specific tasks or platforms. Without a serious step-up in training signals, general-purpose AI for robots won’t scale.

Finally: the embodiment gap. Real-world robots aren’t all built the same. They vary wildly in hardware, movement, and control. That makes it hard to apply one model across multiple systems. Companies end up building their own datasets, tuning their own models, and reinventing the wheel, every single time. The result? High costs, slow iteration, and zero compounding progress across the ecosystem.

Reborn’s insight is simple but powerful: if we want general-purpose robotics, we need shared infrastructure. One that bridges intelligence, data, and embodiment in a unified pipeline. That’s what they’re building.

But solving these three gaps requires more than additional data or better hardware. It demands a new kind of model, one that can learn from diverse sources, adapt across platforms, and improve continuously. That brings us to one of the most important building blocks of Reborn’s approach: Robotic Foundation Models (RFMs).

Why Robotic Foundation Models Matter

RFMs are the key to building robots that aren’t limited to a single task or fixed environment. They are large-scale, pre-trained AI models designed to help robots understand the world, make decisions, and act, all through a single, unified framework, so each robot no longer has to be programmed from scratch.

Think of it this way: traditional robots follow rigid instructions and often require extensive tuning to handle new tasks or hardware. RFMs change the game by integrating perception (seeing), planning (deciding), and control (acting) into one cohesive system. This allows a robot to adapt on the fly, even across different physical forms or “embodiments.”

In other words, an RFM trained in one setting can be used across multiple robot types with minimal adjustment. This is what makes them so powerful: cross-embodiment generalization. It means less time building one-off solutions, and more time scaling intelligent robots that can actually function in messy, real-world environments.
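The article doesn’t describe how Reborn’s RFMs are structured internally. One common pattern for cross-embodiment models, shown here purely as an illustrative assumption, is a shared trunk that turns observations into an embodiment-agnostic latent, plus a small action head per robot type. The sketch below uses plain Python with random, untrained weights; the class name `CrossEmbodimentPolicy` and the embodiment names `arm_7dof` and `humanoid_23dof` are hypothetical.

```python
import math
import random


def init_layer(n_out, n_in, rng):
    """Create a small random weight matrix and a zero bias vector."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def linear(layer, x):
    """Apply an affine map y = Wx + b to a plain Python list."""
    weights, bias = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class CrossEmbodimentPolicy:
    """Shared trunk plus one action head per embodiment (hypothetical, untrained)."""

    def __init__(self, obs_dim, latent_dim, action_dims, seed=0):
        self.rng = random.Random(seed)
        self.latent_dim = latent_dim
        # The trunk is shared by every robot type.
        self.trunk = init_layer(latent_dim, obs_dim, self.rng)
        # Each embodiment only gets its own small output layer.
        self.heads = {
            name: init_layer(dim, latent_dim, self.rng) for name, dim in action_dims.items()
        }

    def add_embodiment(self, name, action_dim):
        """Support a new robot by adding a head; the trunk is reused as-is."""
        self.heads[name] = init_layer(action_dim, self.latent_dim, self.rng)

    def act(self, obs, embodiment):
        latent = [math.tanh(v) for v in linear(self.trunk, obs)]
        return linear(self.heads[embodiment], latent)


policy = CrossEmbodimentPolicy(
    obs_dim=16, latent_dim=8, action_dims={"arm_7dof": 7, "humanoid_23dof": 23}
)
obs = [0.1] * 16
print(len(policy.act(obs, "arm_7dof")))        # 7 joint commands
print(len(policy.act(obs, "humanoid_23dof")))  # 23 joint commands
```

The idea behind this split is that most parameters live in the shared trunk, so data from every robot can inform all of them, while a new robot type needs only a small head of its own.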

The payoff? Robots that are smarter, more flexible, and more intuitive to interact with, capable of understanding language, interpreting non-verbal cues, and handling dynamic tasks without needing to be rebuilt from scratch every time.

Reborn’s mission is to make these powerful models not only possible, but accessible. Let’s break down how.

Let’s Get Real. So… How Does Reborn Actually Do It?

The road to general-purpose robotics is blocked by more than technical hurdles; it’s bottlenecked by data scarcity, fragmented models, and incompatible hardware. Reborn isn’t trying to brute-force a solution from the top down. Instead, it’s flipping the equation: start with people, start with data, and scale from the ground up.

At the heart of Reborn’s strategy is a bold but refreshingly practical idea: build a real-world learning engine powered by everyday human behavior. Forget academic datasets and lab-controlled conditions; this is about making robots learn from how we move, act, and interact in the wild.

Rather than rushing to train an all-knowing AGI, Reborn is taking a progressive, data-first path. It begins with crowd-powered collection and leads toward robust, adaptable robotic intelligence that performs in real environments, not just controlled demos.

Source: Reborn whitepaper

To make this possible, Reborn has designed a multimodal data engine, a holistic framework that captures human behavior across both physical and virtual contexts. It’s not just motion tracking. It’s a system for turning human motion into fuel for Robotic Foundation Models that can actually generalize across tasks and embodiments.

Here’s how they’re doing it:

1. Embodied Vlog

Reborn’s Embodied Vlog (REV) is a mobile app that turns everyday life into training material. Users record and upload first-person videos of real-world tasks, things like cooking, cleaning, fixing objects, or using tools.

Source: Reborn whitepaper

These videos provide rich, fine-grained demonstrations of human behavior from a first-person perspective. Robots trained on this data learn to understand how humans move, manipulate objects, and complete complex tasks using visual and language cues.

Each upload adds to a growing, community-built dataset that helps robots better understand human intent and execution, making them more capable and intuitive in real-world interactions.

And when you’ve covered the real world, what’s next? The virtual one.

2. Mocap Life

To capture full-body movement at scale, Reborn created Rebocap™, a low-cost wearable motion capture system. No studio required: just strap it on at home, at work, or even in VR.

Source: Reborn whitepaper

Using a network of IMUs (inertial measurement units), Rebocap captures high-resolution joint and body movement in real time. This data is then used to train humanoid robots to walk, lift, bend, and move like real people, not stiff machines.
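As a rough illustration of what an IMU contributes, the sketch below shows only the gyroscope step: integrating body-frame angular velocity into an orientation quaternion, then reading a joint angle from the relative orientation of two body segments. It assumes ideal, noise-free gyro readings; real IMU motion capture also fuses accelerometer (and often magnetometer) data to correct drift, and Rebocap’s actual pipeline is not described in the article. All function names are illustrative.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def quat_conjugate(q):
    w, x, y, z = q
    return (w, -x, -y, -z)


def integrate_gyro(q, omega, dt):
    """Rotate orientation q by body-frame angular velocity omega (rad/s) over dt seconds."""
    wx, wy, wz = omega
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = rate * dt
    if angle < 1e-12:
        return q  # no measurable rotation this sample
    s = math.sin(angle / 2) / rate
    dq = (math.cos(angle / 2), wx * s, wy * s, wz * s)
    w, x, y, z = quat_multiply(q, dq)
    n = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / n, x / n, y / n, z / n)  # renormalize to limit float drift


def joint_angle(q_parent, q_child):
    """Total rotation angle (rad) between two segment orientations."""
    w = quat_multiply(quat_conjugate(q_parent), q_child)[0]
    return 2 * math.acos(min(1.0, abs(w)))


# Example: a forearm sensor turning at 1 rad/s about z for 1 s (100 samples at 100 Hz),
# relative to an upper-arm sensor that stays still.
upper_arm = (1.0, 0.0, 0.0, 0.0)
forearm = (1.0, 0.0, 0.0, 0.0)
for _ in range(100):
    forearm = integrate_gyro(forearm, (0.0, 0.0, 1.0), 0.01)
print(round(joint_angle(upper_arm, forearm), 6))  # 1.0
```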

With prices starting at $200, Rebocap is democratizing motion capture, giving creators and contributors access to the kind of high-fidelity data that used to cost thousands. It’s how Reborn makes large-scale, human-grounded data collection possible, without breaking the bank.

And for users who want to help train robots through play? There’s a solution for that too.

3. VR Gaming

Reborn VR Gaming turns fun gameplay into training data. Think Apple Vision Pro meets training dojo.

As players interact in VR (stacking, sorting, building, reaching), every hand gesture and object interaction becomes a high-quality data point. These moments are captured with precision, creating detailed motion datasets that go far beyond what traditional video or basic mocap can provide.

What’s more, the experience is actually fun. Users engage in dynamic challenges that feel like games but generate ergonomic, high-signal data for training robotic agents. It’s where entertainment and robotics research meet, and both sides win.

With data flowing in from everyday actions to immersive virtual tasks, Reborn needed a layer that could tie it all together at scale.

4. Roboverse Simulation

Meet Reboverse, Reborn’s high-fidelity sim engine built on the proven Roboverse framework. It aggregates 25+ top-tier simulation environments, like NVIDIA’s Isaac Sim, Google’s MuJoCo, and SAPIEN, into a single interface.

This platform enables researchers and contributors to generate synthetic training data across diverse tasks, robot types, and sensor inputs. It’s physics-accurate, modular, and built to help models make the leap from simulation to the real world.

By helping narrow the notorious sim-to-real gap, Reboverse accelerates pretraining, enabling faster iteration with lower risk. It even supports teleoperation: users can control virtual robots with their phones or keyboards as if they were game controllers, generating high-quality training data through interactive, gamified tasks.
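The article doesn’t specify Reboverse’s teleoperation interface. As a minimal sketch under that caveat, keyboard teleoperation of a mobile robot can map held keys to a velocity command and log each (observation, action) pair, which is the kind of demonstration data imitation learning consumes. The key bindings, speeds, and the `TeleopRecorder` class are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical bindings: key -> (forward, left, turn) direction.
KEY_BINDINGS = {
    "w": (1.0, 0.0, 0.0),
    "s": (-1.0, 0.0, 0.0),
    "a": (0.0, 1.0, 0.0),
    "d": (0.0, -1.0, 0.0),
    "q": (0.0, 0.0, 1.0),
    "e": (0.0, 0.0, -1.0),
}


def clamp(value, low=-1.0, high=1.0):
    return max(low, min(high, value))


@dataclass
class TeleopRecorder:
    linear_speed: float = 0.5   # m/s at full key press (assumed)
    angular_speed: float = 1.0  # rad/s at full key press (assumed)
    episode: list = field(default_factory=list)

    def command(self, pressed_keys):
        """Combine all currently held keys into one (vx, vy, wz) command."""
        vx = vy = wz = 0.0
        for key in pressed_keys:
            dx, dy, dw = KEY_BINDINGS.get(key, (0.0, 0.0, 0.0))  # unknown keys do nothing
            vx += dx
            vy += dy
            wz += dw
        return (
            clamp(vx) * self.linear_speed,
            clamp(vy) * self.linear_speed,
            clamp(wz) * self.angular_speed,
        )

    def step(self, observation, pressed_keys):
        """Compute the action and log the (observation, action) pair."""
        action = self.command(pressed_keys)
        self.episode.append({"obs": observation, "action": action})
        return action


recorder = TeleopRecorder()
print(recorder.step({"t": 0}, {"w", "a"}))  # (0.5, 0.5, 0.0)
print(recorder.step({"t": 1}, {"w", "s"}))  # (0.0, 0.0, 0.0): opposing keys cancel
print(len(recorder.episode))                # 2
```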

5. Tapping New Frontiers of Human Data

Building on this momentum, Reborn is expanding into new sensing methods, starting with smart gloves that capture tactile feedback such as pressure, texture, and grip. These rare data signals are critical for teaching robots fine motor control and human-like dexterity.

Combined with Rebocap and VR gameplay data, this makes Reborn a pioneer in high-fidelity, multimodal data collection, laying the foundation for more capable, human-aware robotic intelligence.

Building the Stack That Trains the Future

Reborn is turning messy human motion into the foundation of robotic intelligence: one demo, one interaction, one dataset at a time. But they’re not stopping at collection.

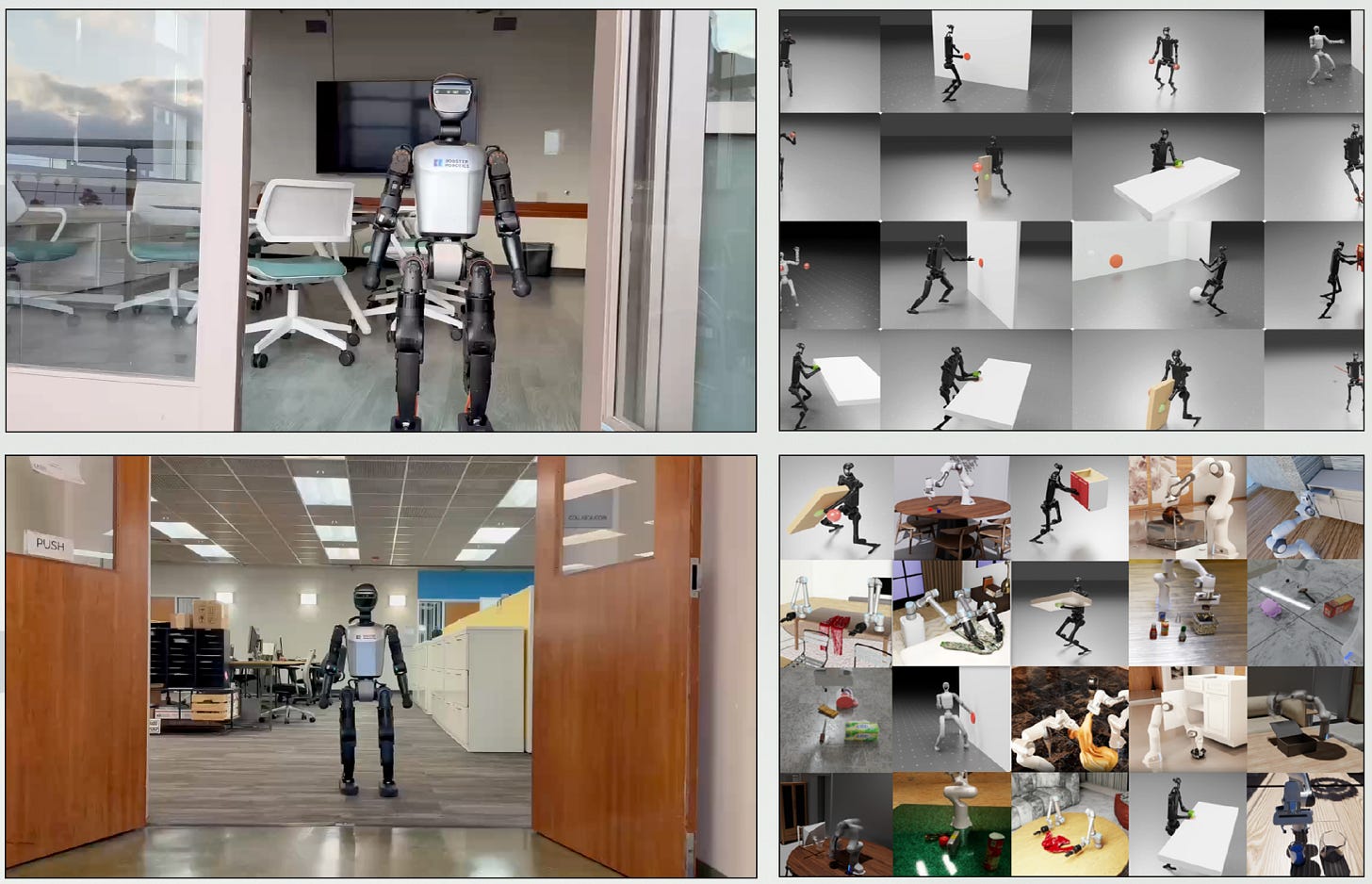

Reborn is now training its own Robotic Foundation Models (RFMs), drawing from motion capture, simulation, and real-world demonstrations. These models are built for generalization, and early partners like Unitree and Booster Robotics are already integrating them into live products.

What makes this powerful is motion retargeting. Using metadata in Reboverse, a single recorded episode can be applied to multiple robot form factors, decoupling model training from specific hardware and unlocking instant scalability.

Source: Reborn whitepaper
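The article doesn’t show Reboverse’s metadata format, so the following is only a simplified sketch of the retargeting idea: per-robot metadata maps human joints to robot joints and supplies joint limits, and the same recorded episode is replayed onto each robot. The `RobotSpec` fields and joint names are hypothetical, and production retargeting usually solves inverse kinematics or an optimization over end-effector poses rather than copying joint angles one-to-one.

```python
from dataclasses import dataclass


@dataclass
class RobotSpec:
    """Hypothetical per-robot metadata used for retargeting."""
    name: str
    joint_map: dict  # human joint name -> robot joint name
    limits: dict     # robot joint name -> (min_rad, max_rad)


def retarget(episode, spec):
    """Map each frame of human joint angles (radians) onto one robot."""
    robot_episode = []
    for frame in episode:
        robot_frame = {}
        for human_joint, angle in frame.items():
            robot_joint = spec.joint_map.get(human_joint)
            if robot_joint is None:
                continue  # this robot has no counterpart for the joint
            low, high = spec.limits[robot_joint]
            robot_frame[robot_joint] = min(high, max(low, angle))  # respect joint limits
        robot_episode.append(robot_frame)
    return robot_episode


# One recorded human episode (two frames of elbow/wrist angles)...
human_episode = [
    {"l_elbow": 1.2, "r_elbow": 2.9, "l_wrist": 0.3},
    {"l_elbow": 1.0, "r_elbow": 2.0, "l_wrist": 0.1},
]

# ...replayed onto two different (hypothetical) robots.
single_arm = RobotSpec("single_arm", {"r_elbow": "elbow"}, {"elbow": (0.0, 2.5)})
humanoid = RobotSpec(
    "humanoid",
    {"l_elbow": "left_elbow", "r_elbow": "right_elbow"},
    {"left_elbow": (-0.5, 2.6), "right_elbow": (-0.5, 2.6)},
)

print(retarget(human_episode, single_arm))
# [{'elbow': 2.5}, {'elbow': 2.0}]
print(retarget(human_episode, humanoid))
# [{'left_elbow': 1.2, 'right_elbow': 2.6}, {'left_elbow': 1.0, 'right_elbow': 2.0}]
```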

Rather than serving one customer at a time, Reborn is building models that generalize across embodiments and can be fine-tuned for specific use cases.

This isn’t a closed system either. Reborn plans to open-source its RFMs and let researchers, hobbyists, and startups train their own models using its platform. It’s a decentralized loop where shared data strengthens shared intelligence.

The full stack is coming together:

Hardware (Rebocap, gloves)

Simulation (Reboverse)

Data Collection (phone teleop, VR, real-world demos)

Foundation Model Training (RFMs)

Deployment (via customers like Unitree)

From solo tinkerers to robotics labs, Reborn is laying the rails for a more inclusive robotics future, one where anyone can help shape machines that think, feel, and move.

Reborn Is Building What Comes After the Robots Wake Up

Everyone’s been looking at text and pixels. Reborn is looking at the world: the messy, physical, embodied world, and turning it into something programmable. This isn’t robotics as spectacle or hype. It’s infrastructure, built for builders.

If you're paying attention, the signal is clear: the robots aren’t coming. They’re already here. And Reborn is helping them learn.