Teaching Robots to Hear: Inside Silencio’s Microphone Layer for the Machine World

Introduction: The Missing Sense in Machines

Would you rather be blind or deaf?

Neither, of course. To lose one sense is to lose a dimension of reality. A blind person learns to listen: to read the world through sound, texture, and rhythm. A deaf person sees everything but misses the invisible meaning carried in tone and echo. Vision gives us shape; hearing gives us context. Together, they make the world whole.

We’ve already given robots eyes. Cameras let them see; GPS and lidar let them map and move. But the world isn’t silent, and neither are we. A human can tell laughter from a cry. A robot can’t, at least not yet.

Forget smartglasses and body cameras. Vision helps robots see, but sound helps them understand. A person can make sense of a thick accent, a mumbled phrase, or the change in someone’s tone mid-sentence. A robot hears none of that. Without audio awareness, it can follow movement but miss meaning, able to detect lips moving but never the emotion or intent behind them.

That gap, the absence of hearing, is what Silencio is trying to close.

What Is Silencio? Teaching AI How to Listen

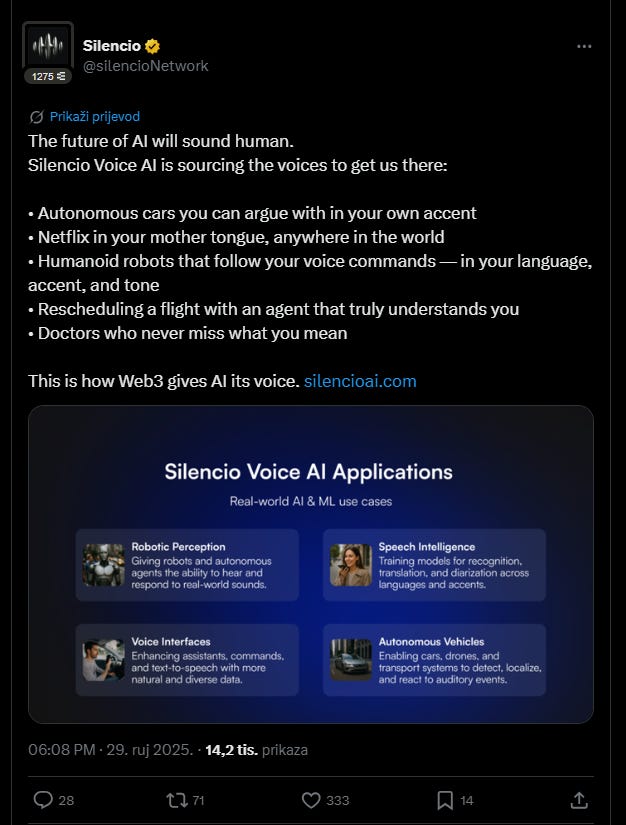

Silencio Network is teaching machines how to listen. It’s building a decentralized system that collects and shares real-world sound data, allowing robots, AI systems, and smart devices to hear the world as we do. Vision gives machines sight; Silencio gives them ears.

Silencio: The World’s Ears for AI & Robotics

Silencio is, in many ways, becoming the world’s ears for AI and robotics.

Most AI models today are limited by the narrow, centralized datasets they’re trained on: recordings from controlled settings and a few dominant languages. That’s why voice assistants often struggle with accents or tones outside the Western world.

In my country, we even have a name for it: “carabao English.” It’s our local way of speaking English, shaped by the rhythm and sound of our native languages. It’s not wrong; it’s just different. But current AI models rarely understand it, because they’re trained on data that doesn’t include voices like ours. Silencio changes that by crowdsourcing diverse, real-world audio from people everywhere, capturing how the world actually sounds across different languages, accents, and environments.

There are over 7,000 languages and tens of thousands of dialects, yet less than 3% are represented in AI training today. Voice is the most natural interface we have, and Silencio’s building the hundreds of thousands of hours of speech data needed to change that. Demand is already here.

But Silencio’s purpose extends beyond training smarter AI. It’s also addressing one of the world’s most overlooked yet damaging environmental issues: noise pollution. Constant exposure to urban noise raises stress, disrupts sleep, and contributes to cardiovascular and cognitive illnesses. In Western Europe alone, it accounts for 1.6 million healthy life years lost each year.

Who wouldn’t get stressed trying to sleep at night while a neighbor belts out karaoke songs? Or booking an Airbnb for a peaceful weekend, only to be kept up by noisy tenants next door? Or maybe you just want a coffee shop where you can get important work done, but you have no way of knowing in real time which spots are actually quiet and uncrowded.

Traditional monitoring systems, dependent on sparse and costly sensors, produce incomplete and outdated data. Silencio’s decentralized approach changes that, creating a global, real-time sound map to inform policymakers, health experts, and city planners.

In other words, Silencio is giving AI and robots the ability to truly hear, and giving humans the data to build quieter, healthier cities. To understand how it works, we have to look at the layer it’s building beneath it all.

The Auditory Infrastructure of the Machine World

Artificial intelligence is moving beyond sight. Cameras and lidar allow machines to perceive shape and motion, but they can’t tell what those movements mean without sound. The next generation of multimodal AI must sense the world like humans do: through the fusion of vision, sound, and context.

Silencio fills that missing dimension. If cameras are the “eyes” of machines, Silencio is building the auditory infrastructure: a decentralized web of devices that capture and verify real-world audio data. This network forms the auditory backbone for autonomous systems, robotics, and smart cities, giving machines the ability to understand how things happen, not just what happens.

With this foundation, a robot could distinguish a calm voice from a shout for help. An autonomous car could detect sirens before they come into view. A city could monitor sound levels block by block to improve urban design. And AI assistants could interpret tone and emotion as naturally as humans do.

Unlike centralized systems owned by corporations, Silencio’s network is human-powered. Every participating smartphone becomes a sensor, an intelligent microphone. Silencio’s smartphone-native DePIN (Decentralized Physical Infrastructure Network) model converts ordinary phones into noise sensors, forming the world’s largest crowdsourced environmental data network.

The process is simple. The Silencio app, available on iOS and Android, uses a phone’s calibrated microphone to measure ambient noise. It never records conversations; it captures only anonymized metrics such as decibel levels, timestamps, and geolocation. Data is processed on-device to preserve privacy, then verified on-chain through the peaq Layer-1 network using Decentralized Device Identity (DID) for secure authentication.
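As a rough illustration of the on-device step, the sketch below reduces a buffer of microphone samples to a single decibel reading and packages it with a timestamp and a coarse location, so the raw audio never leaves the function. This is not Silencio’s implementation: the function names, the `calibration_offset_db` value, and the coordinate rounding are all assumptions made for the example.

```python
import math
import time

def level_dbfs(samples):
    """RMS level of normalized samples (-1.0..1.0) in dB relative to full scale."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    # Floor the value so digital silence does not hit log10(0).
    return 20 * math.log10(max(rms, 1e-9))

def make_measurement(samples, lat, lon, calibration_offset_db=94.0, now=None):
    """Build an anonymized record: only level, time, and rounded position are kept."""
    return {
        # The offset that maps dBFS to a sound pressure level is device-specific;
        # 94.0 is a hypothetical placeholder, not a real calibration value.
        "db": round(level_dbfs(samples) + calibration_offset_db, 1),
        "timestamp": int(now if now is not None else time.time()),
        # Rounding to 3 decimals (roughly 100 m) is an assumed privacy choice.
        "lat": round(lat, 3),
        "lon": round(lon, 3),
    }

# One second of a 1 kHz sine at half amplitude, sampled at 16 kHz.
tone = [0.5 * math.sin(2 * math.pi * 1000 * n / 16000) for n in range(16000)]
record = make_measurement(tone, 52.520008, 13.404954, now=1_700_000_000)
print(record)
```

The record holds four numbers and nothing else, which is the point: a metric derived from the audio can be shared without the audio itself.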

Each data point contributes to a vast geospatial map divided into 650 million hexagonal tiles, covering nearly 44 billion square meters of surface area. The map reveals how sound changes across regions, pinpointing quiet streets, crowded markets, and everything in between.
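To make the tiling idea concrete, here is a minimal sketch of how readings could be binned into hexagons and averaged. The source does not say which grid system Silencio uses, so the flat axial hex grid, the `size_m` tile size, and the function names below are assumptions. Decibels are averaged on the energy scale, which is the standard way to combine sound levels.

```python
import math
from collections import defaultdict

def hex_tile(x_m, y_m, size_m=5.0):
    """Map planar coordinates in meters to axial (q, r) indices of a pointy-top hex grid."""
    q = (math.sqrt(3) / 3 * x_m - y_m / 3) / size_m
    r = (2 / 3 * y_m) / size_m
    # Round in cube coordinates (x + y + z = 0), then fix the axis with the largest error.
    x, z = q, r
    y = -x - z
    rx, ry, rz = round(x), round(y), round(z)
    dx, dy, dz = abs(rx - x), abs(ry - y), abs(rz - z)
    if dx > dy and dx > dz:
        rx = -ry - rz
    elif dy > dz:
        ry = -rx - rz
    else:
        rz = -rx - ry
    return rx, rz

def mean_db(levels):
    """Energy-average a list of decibel values."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels) / len(levels))

def aggregate(points):
    """Group (x_m, y_m, db) readings by tile and return one averaged level per tile."""
    tiles = defaultdict(list)
    for x_m, y_m, db in points:
        tiles[hex_tile(x_m, y_m)].append(db)
    return {tile: round(mean_db(levels), 1) for tile, levels in tiles.items()}

readings = [(0.0, 0.0, 60.0), (1.0, 1.0, 60.0), (100.0, 0.0, 70.0)]
print(aggregate(readings))
```

The first two readings fall in the same tile and are merged, while the third lands in a tile of its own. A global deployment would need a grid defined on the sphere rather than on a flat plane.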

Users are incentivized to participate. Each measurement, venue check-in, or voice sample earns in-app coins convertible to $SLC tokens, creating tangible value for contributing sound data. The network has already recorded 1,300+ years of data from 44,593 active devices, along with 13 million on-chain transactions and over 100,000 daily data events, evidence of its technical maturity and scalability.

Building on this foundation, Silencio’s Voice AI program has rapidly expanded. With 22+ live campaigns, over 6,000 hours of recorded audio, and 500,000 uploads spanning 26 languages and accents, all captured through browser-based sessions with full user participation, the network is now one of the world’s fastest-growing sources of real-world, multilingual sound data. The team has closed two major enterprise contracts for Voice AI datasets and is running proof-of-concepts with five of the world’s largest AI companies, reflecting accelerating demand for diverse, context-rich training data.

New campaigns are launching regularly at their Silencio store, where anyone can join and refer friends to contribute. In select campaigns, Silencio is also one of the first DePINs to offer USD-denominated rewards, with participants earning the equivalent of $10/hour in $SLC tokens.

Beyond enterprise applications, Silencio’s reach extends directly to consumers through Silencio SoundCheck, a Chrome extension that overlays real-time noise data on Airbnb, Google Maps, and Booking.com. The next step is even more ambitious: AI-driven noise reports capable of influencing real estate pricing and urban planning, turning verified, on-chain acoustic data into an actionable public resource.

The impact extends far beyond AI. Noise pollution costs society over $5 trillion annually in healthcare and productivity losses. Silencio’s data enables real-time insights that traditional monitoring can’t provide, allowing governments and researchers to act with precision. With 1.1 million users across 180 countries and 26 billion validated data points collected in 2024, Silencio has already become one of the most active DePIN networks in existence. Supported by $4.8 million in funding, it’s also positioning itself to capture part of the $300 billion personal data market through ethical, incentivized participation.

But what makes this infrastructure truly powerful isn’t just the technology; it’s what people and industries can do with it.

Why Sound Data Matters

Silencio’s data layer is already unlocking real-world value across multiple industries. By providing hyper-local, high-resolution acoustic insights on a global scale, it enables better decision-making in everything from city design to consumer choice.

City governments can identify noise hotspots and craft more effective zoning or traffic policies.

Real estate buyers and renters can evaluate a property’s true sound environment before committing, changing how people choose homes, restaurants, or even workplaces.

In hospitality, travelers can select hotels or Airbnbs based on verified quietness scores, improving comfort and rest.

Silencio has already turned these capabilities into accessible tools. Its SoundCheck Chrome extension overlays live noise ratings (A+ to F) on platforms like Airbnb, Booking.com, and Google Maps. Users can instantly see how quiet or loud a location is before they go, an everyday application of decentralized data that empowers people to make informed, healthier choices.
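A letter rating like this amounts to a lookup from a measured level to a grade. The sketch below shows one way to do it. Only the A+ and F endpoints come from the article; the intermediate letters and every decibel threshold are hypothetical, not SoundCheck’s actual scale.

```python
import bisect

# Hypothetical upper bounds in dB for each grade; anything above the last bound is "F".
BOUNDS = [40, 50, 60, 70, 80]
GRADES = ["A+", "A", "B", "C", "D", "F"]

def noise_grade(db):
    """Return the grade whose range contains the given level (upper bounds inclusive)."""
    return GRADES[bisect.bisect_left(BOUNDS, db)]

for level in (35, 55, 72, 95):
    print(level, noise_grade(level))
```

Keeping the thresholds in a single sorted list means the scale can be retuned without touching the lookup logic.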

From a broader AI perspective, Silencio’s dataset is fueling the next generation of voice-activated and context-aware systems. Its ambient sound libraries train AI models to detect anomalies, like sirens, horns, or mechanical faults, powering smarter infrastructure and safer cities. Silencio transforms sound into actionable intelligence for both humans and machines.

And beyond cities and consumers, a far bigger frontier is emerging: robotics. Because sound isn’t just shaping human decisions, it’s about to redefine how machines perceive the world.

The Big Opportunity: Robotics and Multimodal AI

Perhaps the most profound opportunity lies in robotics. Today’s robots, whether home assistants, drones, or autonomous vehicles, rely heavily on cameras and lidar. But real-world interaction demands more. As NVIDIA’s Jensen Huang put it, “everything that moves will be autonomous,” and autonomy requires perception. Vision sensors cover what’s visible; what’s missing is hearing.

Silencio provides that missing modality. Its vast corpus of labeled audio, from voice commands to environmental sounds, serves as the foundation for teaching machines to interpret and respond to the auditory world. Service robots could detect knocks, alarms, or glass breaking in noisy spaces. Autonomous cars could react to sirens before visual contact. In embodied AI, sound brings emotional and spatial context, identifying where a voice comes from or how it’s spoken.

The market signals align. The global voice AI and speech recognition industry is projected to surpass $100 billion by 2030, while environmental sound recognition is expected to soar from $0.35 billion in 2023 to $23 billion by 2032 (a 44% CAGR). Silencio’s growing dataset, gathered ethically and at scale, positions it as the auditory infrastructure for robotics and AI, playing the role that vision data played for self-driving cars.
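Growth figures like these are easy to sanity-check with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The short sketch below applies it to the environmental sound recognition numbers quoted above; the function names are mine, and the nine-year window is an assumption based on the 2023 and 2032 endpoints.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value reached by compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years

implied = cagr(0.35, 23.0, 2032 - 2023)
print(f"implied CAGR 2023-2032: {implied:.1%}")
print(f"$0.35B at 44% a year for 9 years: ${project(0.35, 0.44, 9):.1f}B")
```

Run on the endpoints as quoted, the formula gives a rate closer to 59% than 44%, and 44% compounded over nine years reaches roughly $9 billion rather than $23 billion. The 44% figure presumably reflects a different base value or forecast window in the underlying market report.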

As Silencio’s whitepaper notes, “auditory intelligence is no longer optional, it’s foundational.”

Conclusion: Building the Audio Layer of the Future

We’re standing at the edge of the robotics revolution. While most of the world races to build faster hardware, sharper vision systems, and more precise location tools, one crucial piece of human perception is still missing: hearing. For machines to truly understand their surroundings, they can’t just see the world, they have to listen to it.

That’s where Silencio Network comes in. By turning ordinary smartphones and browsers into a global network of “ears,” Silencio is tackling two challenges at once: the growing crisis of noise pollution and the lack of diverse, anonymous, real-world audio data that modern AI and robotics need to evolve.

This isn’t just about comfort or convenience. Sound is context. It’s what helps a robot detect danger before it’s visible, a car recognize a siren before the light flashes, or an assistant understand emotion hidden in a voice. Silencio is building that missing auditory infrastructure for the physical world, giving machines not just functionality, but awareness.

The network already spans over 1.1 million users across 180 countries, with 26 billion validated data points, proof that a decentralized, people-powered model for sound data actually works. Every contributor with a smartphone helps map how the world really sounds, while earning rewards through an open, privacy-first system secured by blockchain.

As AI moves beyond screens and into streets, homes, and workplaces, sound data is becoming the backbone of multimodal intelligence. Silencio’s expanding ecosystem, from Voice AI campaigns to robotics partnerships and its upcoming data marketplace, positions it well for the next wave of growth through 2030 and beyond.

In the end, Silencio isn’t just building quieter cities or smarter machines: it’s building understanding. It’s proving that technology can connect humans and AI in a more natural, ethical, and intelligent way.

Silencio is giving machines their ears, and in doing so, it’s helping the world finally listen.

As this new era of sensory intelligence unfolds, conversations around how machines think, act, and evolve are just beginning. That’s the spirit behind The Age of Intelligence: an invite-only gathering co-hosted with Crescendo, MomentumX Global, and Headline Entertainment. Join us on Wednesday, November 19 (6:00–10:00 PM GMT-3, Buenos Aires) as we explore the frontier where AI, robotics, and Web3 converge.

About Conglomerate

Conglomerate is a seasoned content writer and KOL in the crypto x AI x robotics space. Web3 gaming analyst, core contributor at The Core Loop, and pioneer of the onchain gaming hub and Crypto AI Resource Hub.

Book A Call

Curious how robotics, gaming, and AI can drive your next growth wave? Let’s talk. Book a call with Crescendo’s CEO Shash!