The World Is Becoming Self-Aware: Auki’s Vision for the Intelligent Physical World

The Limits of Digital Intelligence

It’s easy to be amazed by how powerful AI has become at solving digital problems. It can write essays, summarize research, generate art, and analyze information faster than any human ever could. Yet, strangely, the same systems that can explain quantum mechanics or paint lifelike portraits still struggle with tasks most people handle without thinking, like folding laundry, cooking a meal, or walking across a street without tripping.

Why can machines “think” so well, yet “act” so poorly?

This puzzle is known as Moravec’s Paradox, named after roboticist Hans Moravec, who described it in the 1980s. It captures a strange truth: what feels effortless for humans is often the hardest for machines, and what’s hard for us can be surprisingly easy for them. We only got good at logic and reasoning in the last few thousand years, but movement, balance, and perception are skills our ancestors refined over millions of years of evolution. That’s why AI can draft a PhD thesis but still can’t reliably fold your shirt.

The Missing Senses of Machines

Humans understand the world through five senses: sight, hearing, touch, smell, and taste. Robots have their own “senses,” though they come in the form of protocols rather than biological organs. These are the systems that let machines perceive, communicate, and interact with their environment.

To move all that information around, robots rely on the same layered architecture as the internet. The Link Layer handles physical connections like Wi-Fi or Ethernet. The Internet Layer routes information between devices, and the Transport Layer (TCP or UDP) carries it between programs. The Application Layer is what lets software actually use that information through web or file protocols.

But robots need more than communication. They need ways to exchange value and understand space.

The Trade Layer fills the first gap. It’s how machines can pay each other, own assets, or exchange digital value, something traditional banking systems, built around human account holders, can’t handle. That’s where blockchain comes in, giving robots a way to transact autonomously without a human approving each payment.

Then there’s the Spatial Layer, arguably the most important sense of all. This is the universal “map” that lets machines understand and reason about the physical world together. Just as humans have proprioception, our internal awareness of where our body is in space, robots need a shared spatial sense to act intelligently in the real world.

Right now, most digital devices have a very crude sense of space. They rely on flat screens, static GPS coordinates, and two-dimensional inputs. But spatial computing is changing that. Devices like Apple’s Vision Pro and Ray-Ban Meta smart glasses are early steps toward a world where computers perceive and interpret the physical world in real time.

This is the frontier that Auki is working on: how to give machines a shared understanding of space, movement, and context so that AI isn’t just smart in the cloud, but intelligent in the real world. Because intelligence without perception is blindness, and perception without space is confusion.

The Framework for Spatial Collaboration

The posemesh (also referred to as the Auki Network) is Auki’s solution to the gap between digital intelligence and physical understanding. It’s a decentralized network that allows machines to perceive, compute, and reason about space together. Think of it as a shared nervous system for devices: a layer that lets robots, headsets, and sensors securely exchange what they see and know in real time.

Rather than acting alone, each machine becomes part of a larger, cooperative fabric of perception and computation. Within this framework, the posemesh creates the conditions for machines to collaborate, make sense of their surroundings, and even interact economically, all without relying on centralized control.

The first thing the posemesh enables is shared perception and collaboration. Today, most devices operate in isolation: your phone’s camera, a delivery robot, and a drone might all “see” the same street but never share what they see. Each one spends time and energy building its own understanding of surroundings the others have already observed. The posemesh changes this by allowing devices to securely exchange spatial data within local clusters, forming a kind of collective vision.

Imagine a warehouse where drones map the space from above, robots detect boxes and paths on the floor, and workers’ AR glasses identify which items need to move. Through the posemesh, all these devices can merge what they see into a single, constantly updated map. Each participant benefits from the others’ perception: robots navigate more safely, drones plan smoother routes, and glasses provide real-time guidance. Everything happens locally, without sending sensitive data to the cloud or depending on a central server. The posemesh turns individual sensors into a shared perception network, helping machines see the world not as separate observers, but as collaborators.
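
To make the idea of merging many devices’ views into one map more concrete, here is a minimal Python sketch. It is not Auki’s implementation or API: the `Observation` record, the grid-cell format, and the `merge_observations` function are hypothetical. It assumes every device already reports positions in one shared coordinate frame (the job the Spatial Layer is meant to do) and that device clocks are roughly in sync, and it resolves conflicts with a simple “newest report wins” rule.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Observation:
    """One device's report about one cell of a shared 2D grid (hypothetical format)."""
    device_id: str
    cell: tuple[int, int]  # (x, y) in a shared reference frame, assumed already aligned
    label: str             # e.g. "free", "box", "path"
    timestamp: float       # seconds; assumes device clocks are roughly synchronized

def merge_observations(observations):
    """Fuse reports from many devices into one map, keeping the newest report per cell."""
    latest = {}
    for obs in observations:
        current = latest.get(obs.cell)
        if current is None or obs.timestamp > current.timestamp:
            latest[obs.cell] = obs
    return {cell: obs.label for cell, obs in latest.items()}

reports = [
    Observation("drone-1", (0, 0), "free", 10.0),
    Observation("robot-7", (0, 0), "box", 12.5),     # newer report for the same cell wins
    Observation("glasses-3", (1, 0), "path", 11.0),
]
print(merge_observations(reports))  # {(0, 0): 'box', (1, 0): 'path'}
```

A real shared map would also need to weigh sensor confidence and track moving objects; last-writer-wins is simply the smallest rule that shows several observers contributing to one picture.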

Beyond perception, the posemesh also tackles the problem of computing limitations through distributed compute and resource efficiency. Devices can form temporary, decentralized clusters to share processing power, storage, and network capacity. This means smaller or low-power devices can offload heavy tasks like 3D mapping or image recognition to nearby peers instead of relying on distant cloud servers.

Picture wearing AR glasses at a concert. They’re lightweight and can’t handle complex visual processing on their own. Through the posemesh, they can borrow extra compute power from nearby phones, cameras, or drones to analyze the scene and render effects instantly. It’s like forming a pop-up supercomputer made of local devices, then dissolving it once the task is complete: faster, more efficient, and far more private.
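
The offloading decision itself can be sketched in a few lines. In this hypothetical Python example (not Auki’s API), each nearby `Peer` advertises its spare compute and a measured latency, and `choose_peer` picks the fastest peer that can handle the job, falling back to local processing when none qualifies. The capacity metric and thresholds are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class Peer:
    """A nearby device offering spare capacity (hypothetical fields)."""
    name: str
    free_gflops: float  # spare compute the peer advertises (illustrative metric)
    latency_ms: float   # measured round-trip time to the peer

def choose_peer(peers, required_gflops, max_latency_ms):
    """Return the lowest-latency peer with enough spare compute, or None to run locally."""
    candidates = [
        p for p in peers
        if p.free_gflops >= required_gflops and p.latency_ms <= max_latency_ms
    ]
    return min(candidates, key=lambda p: p.latency_ms, default=None)

nearby = [
    Peer("phone-a", free_gflops=40.0, latency_ms=8.0),
    Peer("camera-b", free_gflops=5.0, latency_ms=3.0),   # fastest, but too little spare compute
    Peer("drone-c", free_gflops=60.0, latency_ms=15.0),
]
chosen = choose_peer(nearby, required_gflops=20.0, max_latency_ms=20.0)
print(chosen.name if chosen else "run locally")  # phone-a
```

Preferring latency over raw capacity reflects the concert example: rendering effects “instantly” matters more than squeezing out maximum throughput.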

All of this comes together in the posemesh’s open, incentivized data economy, which makes cooperation sustainable. The network runs on a trustless economic layer where every exchange of data or compute is verified, rewarded, or billed automatically. Devices that contribute useful work earn reputation and rewards, while others pay only for what they use.

For instance, a delivery robot could share its camera data with a mapping company updating a city’s 3D model. The robot earns tokens for its contribution, and the company pays for the specific data it needs. Over time, this forms a fair, self-sustaining marketplace where digital twins (virtual versions of real places) can be created, improved, and licensed without relying on big tech intermediaries.
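
The “earn for what you contribute, pay for what you use” loop can be illustrated with a toy ledger. This Python sketch is hypothetical and deliberately centralized: `DataMarket`, its methods, and the token amounts are invented for illustration, and it leaves out the verification, signatures, and on-chain settlement that a trustless network would require.

```python
from collections import defaultdict

class DataMarket:
    """Toy in-memory ledger (hypothetical): buyers pay per data item, contributors are credited."""

    def __init__(self):
        self.balances = defaultdict(int)    # token balances in whole units
        self.reputation = defaultdict(int)  # count of contributions that were purchased
        self.listings = {}                  # item_id -> (contributor, price)

    def list_item(self, contributor, item_id, price):
        """A device offers a piece of data (e.g. a street scan) at a fixed price."""
        self.listings[item_id] = (contributor, price)

    def purchase(self, buyer, item_id):
        """Move tokens from buyer to contributor and credit the contributor's reputation."""
        contributor, price = self.listings[item_id]
        if self.balances[buyer] < price:
            raise ValueError(f"{buyer} cannot afford {item_id}")
        self.balances[buyer] -= price        # the buyer pays only for this item
        self.balances[contributor] += price  # the contributor earns the payment
        self.reputation[contributor] += 1    # useful work builds reputation

market = DataMarket()
market.balances["mapping-co"] = 100
market.list_item("robot-42", "street-scan-001", price=30)
market.purchase("mapping-co", "street-scan-001")
print(market.balances["mapping-co"], market.balances["robot-42"], market.reputation["robot-42"])  # 70 30 1
```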

How Auki Brings Spatial Intelligence to Life

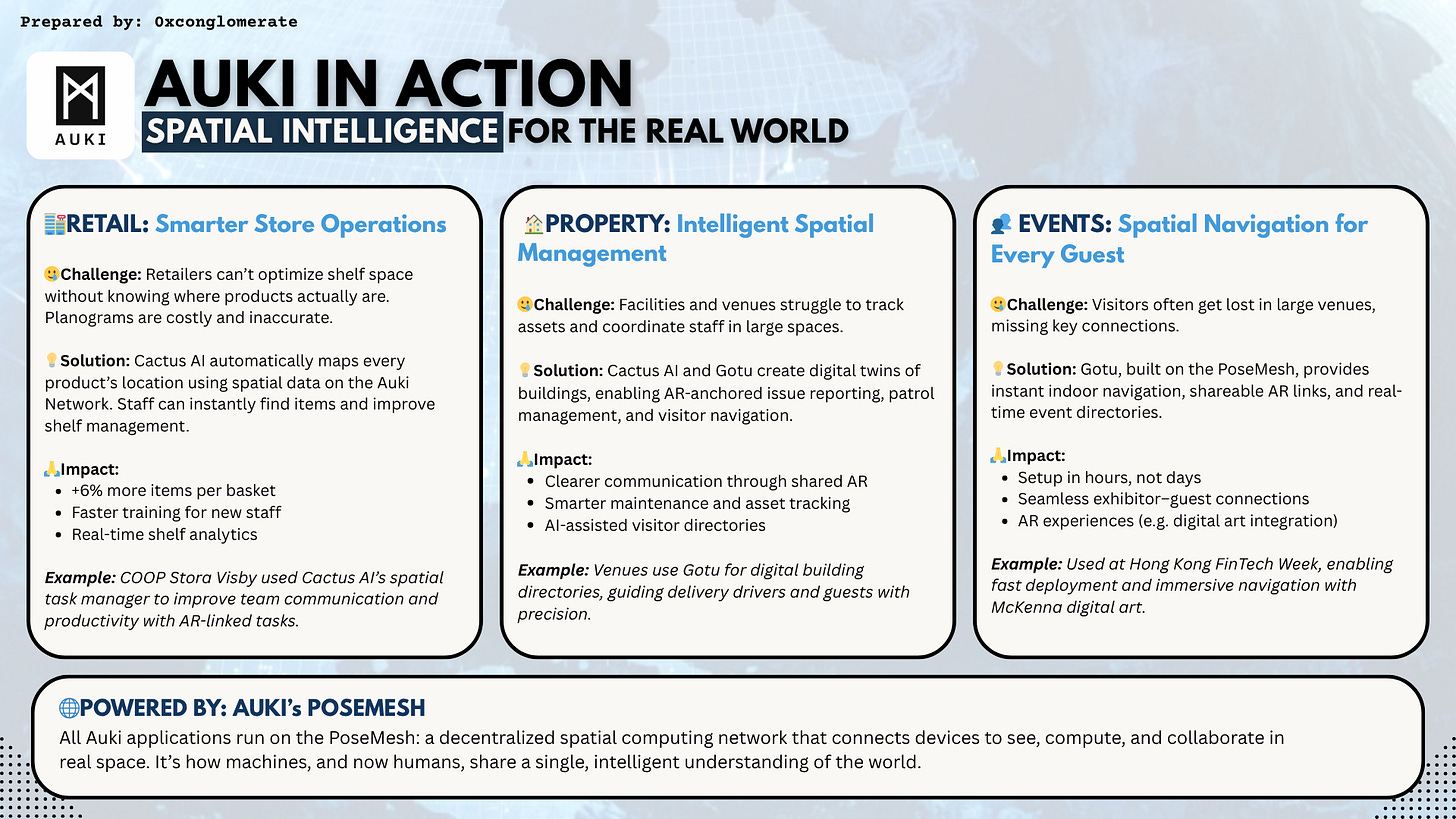

Across industries, Auki is already proving how the posemesh transforms physical environments into intelligent systems. Retailers use it to track products in real time, property managers to coordinate teams through shared AR overlays, and event organizers to guide thousands of visitors seamlessly through complex venues.

Each solution, whether in stores, buildings, or large-scale events, demonstrates the same principle: when devices share perception, compute, and context through the posemesh, space itself becomes an intelligent interface.

Beyond these use cases, Auki’s reach extends into a growing number of real-world deployments that reveal what spatial computing can achieve at scale.

At Hong Kong’s MTR Rethink Conference, visitors explored an interactive AR experience where digital objects blended seamlessly into the real world. At Tai O Heritage Hotel, the same technology brought local history to life, turning walls and corridors into interactive exhibits powered by McKenna, Auki’s spatial art platform.

In logistics, Toyota Material Handling demonstrated how the posemesh simplifies the creation of digital twins for smart warehouses. Workers can now visualize real-time layouts, assign routes to autonomous forklifts, and update tasks instantly. It shows that spatial computing doesn’t just make environments smarter; it makes people more effective within them.

Auki has also partnered with Zapper, and the collaboration debuted at AWE (Augmented World Expo), the world’s largest spatial computing expo. There, visitors navigated the venue using Auki’s assisted navigation: scan a QR code, open an instant “app clip” (no installation required), choose a destination, and receive precise turn-by-turn guidance indoors.

These examples point to something deeper. The same system that helps you find a booth at a conference could one day guide a visually impaired person through a crowded station, direct emergency responders through a complex hospital layout, or help travelers move confidently through unfamiliar airports. In malls and tourism hubs, where wayfinding can make or break a visit, Auki’s technology can quietly boost efficiency, accessibility, and sales simply by making space understandable.

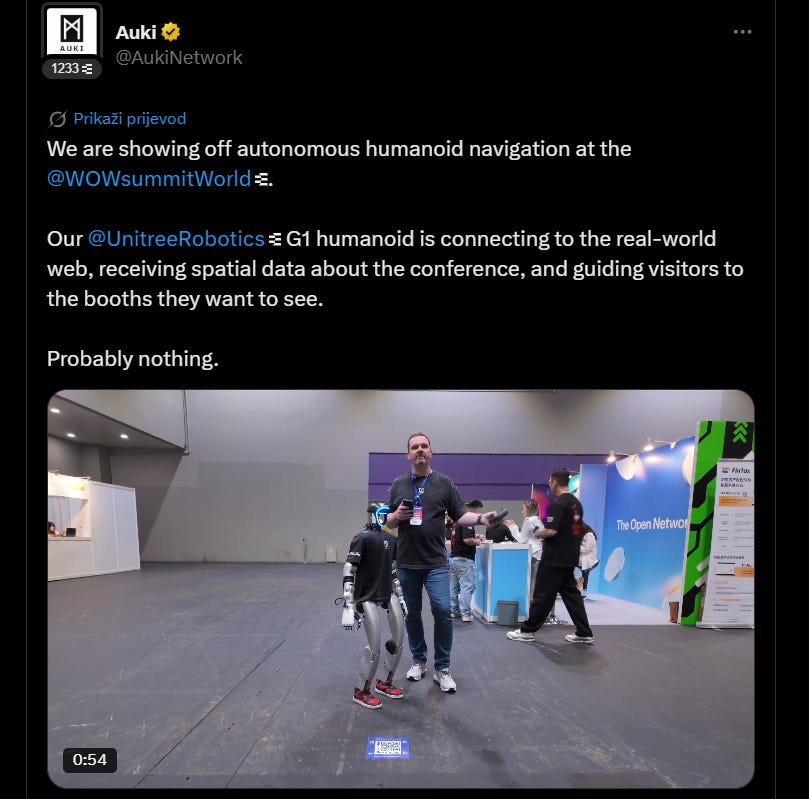

What Auki is doing for people, it’s also beginning to do for machines. Robots depend on spatial understanding to navigate, manipulate objects, and coordinate with one another. With the posemesh, this understanding becomes shared: robots can access real-time environmental data, offload compute to nearby devices, and even exchange sensory information to collectively interpret their surroundings.

Imagine a fleet of delivery robots in a city. Instead of each one separately mapping the same street, they cooperate: one captures the layout, another identifies obstacles, and a third calculates the best route, all through the Auki Network. This collective intelligence reduces duplication, saves compute power, and allows machines to operate with a level of context awareness that feels almost organic.

A Smarter Physical World

We often talk about AI as if it lives somewhere else: in the cloud, in our apps, or inside language models that process words instead of worlds. But the truth is, most of human life doesn’t happen online. By some estimates, nearly 70% of the global economy is tied to physical locations, yet the AI revolution has barely touched the spaces where most real work happens.

Without intelligence that understands space, movement, and physics, AI remains abstract: brilliant at thinking, but blind to doing. This brings us back to Moravec’s Paradox, that strange imbalance between what’s easy for humans and what’s extraordinarily hard for machines. For decades, we’ve built AIs that could reason but not perceive, calculate but not comprehend, and process information without ever touching the world that produced it.

Auki’s work suggests that this paradox isn’t permanent. By giving machines a shared sense of space, the posemesh allows perception and reasoning, the two halves of intelligence, to finally meet. A robot navigating a warehouse, an AR headset guiding a worker, and a drone mapping a city all begin to operate from the same reality. The moment machines can see together, they start to understand together.

If Moravec was right that what comes easiest to us comes hardest to them, then Auki may be the first to reverse our relationship with technology. We stop adapting to machines, and they start adapting to us.

As Auki’s co-founder, Nils Pihl, put it:

“Once you make the physical world accessible to AI, AI helps make the physical world accessible to us.”

It’s a fitting way to think about what comes next. We built digital intelligence to help us understand our ideas; now, with Auki, we’re teaching it to understand our world.

About Conglomerate

Conglomerate is a seasoned content writer and KOL (key opinion leader) in the crypto x AI x robotics space. Conglomerate is also a Web3 gaming analyst, a core contributor at The Core Loop, and the pioneer of the onchain gaming hub and the Crypto AI Resource Hub.

Book a Call

Curious how robotics, gaming, and AI can drive your next growth wave? Let’s talk. Book a call with Crescendo’s CEO Shash!