Crypto-Robotics Funnel v2

In the first version of the Crypto-Robotics Funnel, we tried to answer something basic but important:

Where is robotics actually moving forward, and where is it still stuck?

Robotics doesn’t progress all at once. Some parts leap ahead quickly, like sensors getting smarter, maps getting richer, and compute getting cheaper. Other parts move slowly because they depend on the real world: collecting data, building hardware, deploying fleets. The funnel helped lay all these layers out so we could see how uneven the progress really is.

It also showed something deeper: crypto is starting to act like the glue between these layers. It gives robots shared standards, shared incentives, and shared ways to coordinate, so the entire stack can evolve together instead of in isolated silos.

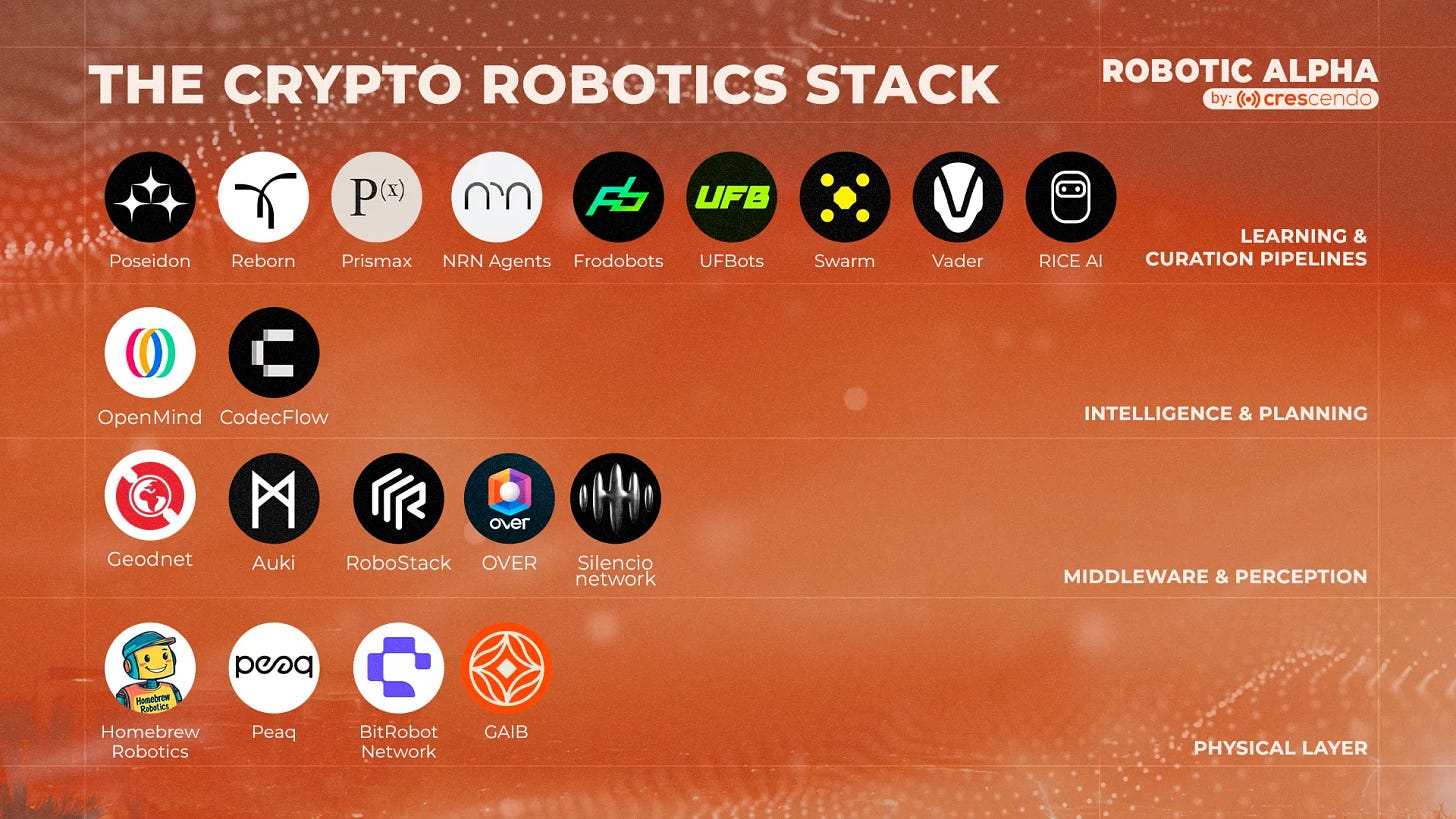

In this v2 update, nothing is being reinvented, only revealed more clearly. The funnel stays the same, but the world around it keeps evolving. As projects emerge, we’re gradually placing them into the categories we originally defined: Learning & Curation Pipelines (how robots get their experience), Intelligence & Planning (how robots learn to think and decide), Middleware & Perception (how robots understand and stay connected), and Physical Layer (where robots meet the real world).

Our new additions sharpen the picture. One of them teaches robots how humans really move. Another maps the world in 3D so machines know exactly where they stand. Another gives AI the sense of hearing it’s been missing. Another sends deployed robot fleets into real environments to learn how the world responds. And one more tackles the hardest frontier of all: how robots and compute pay their own way so they can escape the lab and operate in the real world.

Each of these contributions points to a deeper pattern. Robotics isn’t one monolithic industry; it’s a stack of interdependent layers. Mapping projects to their proper layer helps us see where progress is accelerating, where it’s lagging, and where the next real breakthroughs are likely to surface.

That’s the purpose of v2: to sharpen the map. With the layers in place and the ecosystem expanding, the best way forward is to look at these new projects one by one and understand how each one pushes a different part of the stack forward.

Silencio: Giving Machines the Ability to Hear

Robots are getting better at seeing, mapping, and reasoning, but one human sense has been left behind almost entirely: hearing. That gap shows up everywhere: voice assistants breaking down with non-Western accents, robots unable to detect urgency in someone’s tone, AI that can see a crowded street but can’t hear the incoming motorcycle. The world is full of sound, yet most AI models still live in silence.

Silencio attacks this directly by building a decentralized network that captures sound the way humans actually experience it: messy, noisy, multilingual, and full of context. Instead of relying on studio-clean samples from a handful of dominant languages, Silencio collects real-world audio through simple browser sessions. Contributors record speech, accents, background noise, and environmental sounds. Each clip is verified through decentralized checks, labeled by accent, emotion, distance, loudness level, and environment, and then added to a massive open dataset.
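To make the pipeline concrete, here is a minimal sketch of how a clip carrying those labels might pass a decentralized check before entering the dataset. Everything here is an assumption for illustration: the `AudioClip` fields simply mirror the label categories named above, and the rule that a clip is accepted once enough independent validators have voted and a strict majority approve is a hypothetical stand-in, not Silencio’s documented mechanism.

```python
from dataclasses import dataclass, field

# Hypothetical record: field names mirror the label categories in the text,
# not Silencio's actual schema.
@dataclass
class AudioClip:
    clip_id: str
    accent: str
    emotion: str
    distance_m: float
    loudness_db: float
    environment: str
    votes: list[bool] = field(default_factory=list)  # one approve/reject per validator

def is_verified(clip: AudioClip, min_validators: int = 3) -> bool:
    """Accept a clip only if enough validators voted and a strict majority approved."""
    if len(clip.votes) < min_validators:
        return False
    approvals = sum(clip.votes)
    return approvals * 2 > len(clip.votes)

def build_dataset(clips: list[AudioClip]) -> list[AudioClip]:
    """Keep only verified clips; rejected or under-reviewed clips never reach the dataset."""
    return [c for c in clips if is_verified(c)]

clips = [
    AudioClip("a1", "Nigerian English", "calm", 1.5, 62.0, "street", [True, True, False]),
    AudioClip("a2", "Tamil", "urgent", 3.0, 78.0, "market", [True, False]),  # too few votes
]
print([c.clip_id for c in build_dataset(clips)])  # ['a1']
```

Requiring a minimum number of votes, not just a majority, keeps a single friendly validator from pushing a clip through on its own.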

This is crucial because today’s models barely scratch the surface of human linguistic diversity. There are more than 7,000 languages and tens of thousands of dialects on Earth, but fewer than 3% appear in AI training data. Silencio is working to close that gap: 22+ live campaigns, 500,000+ uploads, 6,000 hours of audio, 26 languages and accents, and the momentum of one of the fastest-growing Voice AI datasets in the world. And unlike most DePINs, Silencio pays contributors USD-denominated rewards (~$10/hr).

But Silencio isn’t just about training robots to hear people. It’s also tackling something that affects humans daily: noise pollution. Urban noise contributes to stress, sleep problems, and even heart disease; in Western Europe alone, it is estimated to cost 1.6 million healthy life years every year. Traditional monitoring relies on expensive, sparsely deployed sensors, meaning cities often have no idea what their residents are actually experiencing. Silencio flips the model, turning everyday devices into a distributed acoustic sensor grid that builds a live “sound map” of the world. Cities, health agencies, and planners can finally get accurate data on where noise creates harm.
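A sound map built from many phones has to combine readings carefully, because decibels are logarithmic. The sketch below bins (latitude, longitude, dB) readings into grid cells and averages each cell on an energy basis, the standard way to average sound levels. The grid size, the function names, and the idea that Silencio aggregates exactly this way are assumptions for illustration only.

```python
import math
from collections import defaultdict

def energy_average_db(levels_db: list[float]) -> float:
    """Average sound levels on an energy basis: convert dB to relative energy,
    take the mean, and convert back. An arithmetic mean of dB values would
    understate loud events."""
    mean_energy = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_energy)

def sound_map(readings: list[tuple[float, float, float]],
              cell_deg: float = 0.001) -> dict[tuple[int, int], float]:
    """Bin (lat, lon, dB) readings into hypothetical square grid cells and
    energy-average the readings in each cell."""
    cells: dict[tuple[int, int], list[float]] = defaultdict(list)
    for lat, lon, db in readings:
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        cells[key].append(db)
    return {key: energy_average_db(levels) for key, levels in cells.items()}

readings = [
    (45.00005, 9.00005, 60.0),  # two phones on the same block
    (45.00009, 9.00002, 70.0),
    (45.01, 9.0, 50.0),         # a quieter street about 1 km away
]
print(sorted(round(v, 1) for v in sound_map(readings).values()))  # [50.0, 67.4]
```

Note the gap between the two methods: a naive mean of 60 dB and 70 dB gives 65 dB, while the energy average is about 67.4 dB, because the louder reading carries ten times the acoustic energy.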

Cameras let machines see what’s happening, but sound explains how it’s happening. Vision gives robots shapes; audio gives them meaning: footsteps running vs. walking, a crash behind a door, a voice trembling with stress. Multimodal AI can’t reach its potential without this dimension, and Silencio is quietly building one of the most important datasets in physical AI.

Hearing gives robots context, but it doesn’t teach them how humans move. For that, the next layer in the stack steps in: a project that turns everyday human actions into the training fuel physical AI has been starving for.

Vader: Turning Human Motion Into Training Data

If v2 of the Crypto-Robotics Funnel is about mapping where each new project fits in the emerging “machine sense-making stack,” then Vader sits at the layer where everything truly begins: experience. Robots can’t reason their way into physical intelligence. They don’t learn to pour water or open a cabinet from a textbook, and they can’t copy motion from diagrams. To act in the world, they need the same thing humans needed growing up: thousands of small, imperfect, first-person examples of how people actually move.

Agents built for Web3 could think in code, but they couldn’t handle the physical messiness of the real world. Language models had the entire internet to learn from; vision models had billions of labeled images. Robotics had… staged lab demos. Clean, scripted, slow. Nothing like real life.

That’s the wall Vader saw coming, and the reason they pivoted from “AI in code” to “AI in flesh.”

Their insight was deceptively simple: robots need to see the world the way humans see it, from our eyes, doing our tasks, in our environments.

EgoPlay, Vader’s flagship system, turns this idea into a global data engine. Instead of relying on expensive motion-capture studios or research labs, Vader uses everyday devices (smartphones and smart glasses) to collect uncut, first-person videos of ordinary human behavior: pouring a drink, wiping a table, folding a shirt, turning a doorknob, flipping a switch. Each action becomes a “micro-quest,” and every submission passes through a verification pipeline that checks clarity, correctness, and task fidelity.
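The three checks named above can be pictured as an ordered pipeline that rejects a submission at the first failure. The sketch below is a hypothetical illustration: the `Submission` fields, the 0.6 sharpness threshold, and the idea that correctness compares the quest against an action-recognition label are assumptions, not EgoPlay’s actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Submission:
    quest: str            # the micro-quest, e.g. "pour a drink"
    detected_action: str  # hypothetical output of an action-recognition model
    sharpness: float      # hypothetical clarity score in [0, 1]
    covered_steps: int    # task steps visible in the clip
    required_steps: int   # task steps the micro-quest asks for

Check = Callable[[Submission], bool]

def clarity(s: Submission) -> bool:
    return s.sharpness >= 0.6  # assumed threshold

def correctness(s: Submission) -> bool:
    return s.detected_action == s.quest  # the clip shows the requested action

def task_fidelity(s: Submission) -> bool:
    return s.covered_steps >= s.required_steps  # the whole task, uncut

PIPELINE: list[tuple[str, Check]] = [
    ("clarity", clarity),
    ("correctness", correctness),
    ("task_fidelity", task_fidelity),
]

def verify(s: Submission) -> tuple[bool, Optional[str]]:
    """Run checks in order; return (accepted, name of the first failed check)."""
    for name, check in PIPELINE:
        if not check(s):
            return False, name
    return True, None

print(verify(Submission("pour a drink", "pour a drink", 0.8, 3, 3)))  # (True, None)
print(verify(Submission("pour a drink", "pour a drink", 0.3, 3, 3)))  # (False, 'clarity')
```

Returning the name of the failed check, rather than a bare boolean, is what would let an app tell a contributor why a clip was rejected.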

Contributors earn Vader Points (VP), convertible into $VADER tokens or in-app rewards. Vader’s Version 2 goes further, layering on skill trees, challenges, and leaderboards to turn physical data collection into a real-world multiplayer game. They call it a Physical MMO: every movement you record becomes training fuel for future humanoids.

There’s a deeper philosophy behind this. Vader isn’t imagining a future where robots replace human labor wholesale. They’re targeting the physical work most people would rather not do: repetitive, tiring, or dangerous tasks. Just as AI accelerated mental work without removing human creativity, physical AI can relieve the burdens of motion without stealing agency. But none of that can happen until robots learn how humans really behave, not in labs, but in life.

That’s what Vader is building: a scalable, reward-driven, global pipeline of human motion that teaches machines the rhythm of physical reality.

But even the best motion data isn’t enough. A robot that knows how to move still needs to know where it is. That requires a spatial layer: a shared map of the world, which is exactly where the next project takes us.

Over the Reality: Building the World’s Decentralized 3D Map

Robots can’t do much with motion data alone; they also need a stable sense of where they are. Every robot needs this kind of “spatial memory” before it can act safely around people.

Over the Reality is building something GPS never tried to be: a precise, community-built 3D model of the physical world. Instead of sending out expensive mapping trucks, OVER lets ordinary people scan real locations using their phones or 360° cameras through Map2Earn. In return, they earn $OVR tokens. Those scans, more than 72 million images across 150,000+ mapped locations, are stitched into OVRMaps, one of the largest decentralized 3D mapping datasets anywhere.

Once the maps exist, devices can use Over’s VPS (Visual Positioning System) to locate themselves with centimeter-level precision simply by looking through their camera.

But Over the Reality is not just about the maps; several products are built on top of them. OVRLand NFTs act as spatial publishing rights: 300 m² digital parcels tied to real coordinates. Creators use OVER’s full XR toolchain (AI 3D asset generator, Web Builder, Live Editor, Unity SDK) to pin experiences to specific places. More than 880,000 OVRLands have been sold and 23,000+ AR experiences published, and the whole pipeline now works across phones, browsers, and smart glasses. In effect, Over the Reality gives physical space a programmable layer.
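What does a parcel “tied to real coordinates” mean in practice? As a hedged illustration, the sketch below maps any latitude/longitude to a deterministic parcel ID on a simple square grid whose cells cover roughly 300 m². The square grid, the equirectangular approximation, and the function names are assumptions for readability; OVER’s actual tiling scheme may differ, and this approximation degrades near the poles.

```python
import math

METERS_PER_DEG = 111_320.0        # approximate length of one degree of latitude
PARCEL_SIDE_M = math.sqrt(300.0)  # ~17.32 m, so each square cell covers ~300 m²

def parcel_id(lat: float, lon: float) -> tuple[int, int]:
    """Map a coordinate to a (row, col) cell on a hypothetical ~300 m² grid."""
    row = math.floor(lat * METERS_PER_DEG / PARCEL_SIDE_M)
    # Use the row's centre latitude so every point in a row shares one east-west scale.
    row_center_lat = (row + 0.5) * PARCEL_SIDE_M / METERS_PER_DEG
    m_per_deg_lon = METERS_PER_DEG * math.cos(math.radians(row_center_lat))
    col = math.floor(lon * m_per_deg_lon / PARCEL_SIDE_M)
    return row, col

def parcel_center(row: int, col: int) -> tuple[float, float]:
    """Inverse of parcel_id: the coordinate at the middle of a cell."""
    lat = (row + 0.5) * PARCEL_SIDE_M / METERS_PER_DEG
    m_per_deg_lon = METERS_PER_DEG * math.cos(math.radians(lat))
    lon = (col + 0.5) * PARCEL_SIDE_M / m_per_deg_lon
    return lat, lon

row, col = parcel_id(45.4642, 9.1900)
print(parcel_id(*parcel_center(row, col)) == (row, col))  # True
```

Because the ID is a pure function of the coordinate, any device that knows where it stands (for example, via a visual positioning fix) can look up which parcel, and therefore which pinned experience, applies there.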

But the dataset has outgrown AR. With its 100,000+ ultra-detailed 3D maps, Over the Reality has become a foundation for Physical AI: training Vision Foundation Models that can understand depth, reconstruct scenes, and perceive space. These models support everything from robotics navigation to 3D design tools to next-gen architecture workflows. AI companies are already licensing datasets directly from Over, and the team is training their own Large Geospatial Model (LGM) to push machine perception even further.

When you zoom out, the pattern is clear: Over the Reality started as a decentralized AR world and evolved into the spatial backbone for the machine economy. It turns everyday cameras into a world-scale 3D scanner, gives machines a way to locate themselves precisely, and provides the spatial intelligence robots will depend on as they move into our cities, workplaces, and homes.

If Over the Reality gives robots spatial awareness, and Silencio gives them hearing, and Vader gives them human motion, there’s still one question left: how do these machines survive economically outside the lab? That takes us to the part of the stack where infrastructure meets finance, and where GAIB enters the picture.

GAIB: The Economic Engine Behind AI & Robotics

GAIB sits at the part most people overlook: the layer that makes robots economically possible at all. Every robot deployed in a mall, factory, office, or home stands on top of a silent mountain of infrastructure: GPUs powering model inference, data centers running training loops, RaaS fleets needing maintenance and energy. And just like humans, embodied AI can’t exist in the real world without a steady way to pay its bills.

This is where robotics has quietly hit its biggest bottleneck. Training and running physical AI is expensive; deploying robots at scale is even more so. A single NVIDIA H200 can cost as much as a small car. Humanoid fleets require continuous compute. Even lightweight service robots need ongoing financing for batteries, repairs, connectivity, and model updates. Traditional financing doesn’t understand this category well, and centralized operators can’t expand fast enough to meet demand.

GAIB reframes robotics and AI infrastructure as a new financial asset class, treating GPUs, robot fleets, and AI energy systems as onchain, yield-bearing primitives. Instead of sitting as illiquid hardware in a warehouse, these assets become tradable, composable units inside DeFi. Their synthetic dollar, AID, anchors the system. Stake that AID and you get sAID, a receipt token backed not by token emissions but by real cash flows: compute leases, Robotics-as-a-Service revenue, and structured AI infra deals. The result is a stable, transparent pipeline of yield that currently targets around 14.4% APY from actual robotics and GPU operations.
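One common way to build a yield-bearing receipt token is an exchange-rate vault: staking mints shares, incoming cash flows raise the assets behind those shares, and each share redeems for more of the underlying over time. The sketch below assumes sAID works along these lines; GAIB’s actual contracts may use a different design (for example, rebasing balances), and the class and method names are hypothetical. Amounts are integers in base units (6 decimals assumed) to avoid floating-point rounding.

```python
class ReceiptVault:
    """Hypothetical exchange-rate vault: stake AID, receive sAID shares."""

    def __init__(self) -> None:
        self.total_assets = 0  # AID held by the vault, in base units
        self.total_shares = 0  # sAID in circulation, in base units
        self.shares: dict[str, int] = {}

    def stake(self, user: str, amount: int) -> int:
        # The first depositor mints 1:1; later depositors mint at the current rate.
        if self.total_shares == 0:
            minted = amount
        else:
            minted = amount * self.total_shares // self.total_assets
        self.total_assets += amount
        self.total_shares += minted
        self.shares[user] = self.shares.get(user, 0) + minted
        return minted

    def distribute_cash_flow(self, amount: int) -> None:
        # Revenue (e.g. compute leases, RaaS income) adds assets without minting
        # shares, so every existing sAID becomes redeemable for more AID.
        self.total_assets += amount

    def unstake(self, user: str) -> int:
        owned = self.shares.pop(user, 0)
        payout = owned * self.total_assets // self.total_shares if owned else 0
        self.total_assets -= payout
        self.total_shares -= owned
        return payout

UNIT = 10**6  # assumed 6 decimals
vault = ReceiptVault()
vault.stake("alice", 100 * UNIT)
vault.distribute_cash_flow(14_400_000)    # 14.4 AID of hypothetical cash flow
print(vault.stake("bob", 114_400_000))    # 100000000 shares at the new rate
print(vault.unstake("alice"))             # 114400000, i.e. 114.4 AID
```

In this model the yield comes only from `distribute_cash_flow`, which is the point the paragraph makes: no new tokens are minted to pay stakers, so returns track the underlying revenue.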

For the robotics ecosystem, this unlocks something critical.

Data centers and neo-cloud operators get a new funding rail to scale GPU racks without long bank approvals.

Robot OEMs and fleet operators gain a path to finance deployments, turning long-term RaaS contracts into immediate liquidity.

And developers building Physical AI, including projects like Vader, RICE, and OVER, benefit from a more abundant, decentralized supply of compute that isn’t limited to a handful of tech giants.

This shifts robotics from a slow, capex-heavy industry to one that looks more like a financialized network: fast-moving, globally capitalized, and modular. Just as DePIN brought decentralized sensing and mapping to the edges, GAIB brings decentralized funding to the core.

GAIB is the part of the funnel that keeps everything else running. Vader and RICE generate experience, Silencio and OVER give robots perception, but none of those layers can scale into millions of devices without a sustainable economic backbone. GAIB answers the question every robotics team avoids but eventually confronts: how do we pay for the compute, energy, and hardware needed for embodied AI to exist at scale?

With compute and infrastructure funded, the last project in this update brings us back to experience: the day-to-day interactions robots need to grow smarter over time. That’s where RICE steps in.

RICE: A DePIN Network for Real-World Robotics Experience

If Vader teaches robots how humans move, RICE AI teaches robots how the world responds. In the funnel, it sits in the same learning layer but approaches the problem from the opposite angle: instead of humans recording tasks, RICE sends actual robots into real environments and turns their day-to-day interactions into training data. No matter how good a simulation or staged demo is, nothing replaces what robots learn from being out in the world: dodging people in a hallway, navigating a store aisle, or delivering items across an office floor.

RICE AI comes from Rice Robotics, a team with more than 10,000 deployed robots across SoftBank HQ, Mitsui Fudosan, and 7-Eleven Japan. They’ve seen firsthand why robotics progress is slow: only a handful of corporations collect the kind of rich, multimodal experience you need to train Robotics Foundation Models (RFMs). Everyone else works in the dark. RICE stands out by building a DePIN-style network where robot fleets stream vision, audio, motion, and environmental signals into a shared pool, each feed verified onchain, each contributor rewarded in $RICE based on quality and usefulness.
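The reward rule described above (verified feeds, paid according to quality and usefulness) can be illustrated as a pro-rata split of a fixed token pool per epoch. This is a hypothetical sketch: the multiplicative score, the 0 to 100 scales, the epoch pool, and all names are assumptions, not RICE’s published tokenomics.

```python
from dataclasses import dataclass

@dataclass
class Feed:
    robot_id: str
    verified: bool   # passed onchain verification (assumed to be a yes/no outcome)
    quality: int     # hypothetical 0-100 score (coverage, noise, completeness)
    usefulness: int  # hypothetical 0-100 score (value of the data for training)

def split_rewards(feeds: list[Feed], pool: int) -> dict[str, int]:
    """Divide an epoch's token pool in proportion to quality x usefulness.

    Unverified feeds earn nothing. Integer division leaves a small remainder
    undistributed rather than over-paying the pool.
    """
    scores: dict[str, int] = {}
    for f in feeds:
        if f.verified:
            scores[f.robot_id] = scores.get(f.robot_id, 0) + f.quality * f.usefulness
    total = sum(scores.values())
    if total == 0:
        return {}
    return {rid: pool * s // total for rid, s in scores.items()}

feeds = [
    Feed("m1-tokyo", True, 90, 80),
    Feed("m1-osaka", True, 60, 40),
    Feed("m1-spoof", False, 100, 100),  # fails verification, earns nothing
]
print(split_rewards(feeds, 1000))  # {'m1-tokyo': 750, 'm1-osaka': 250}
```

Multiplying the two scores means a feed must be both clean and relevant to earn well; a pristine recording of an empty corridor scores low on usefulness and is paid accordingly.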

The system has three pillars. First, the DePIN network itself, where robots act as roaming sensors and every route or task becomes training fuel. Second, the AI Foundry: a platform for training RFMs that focus on physical skills like navigation, manipulation, and real-time reaction, mixing real-world data with synthetic and neuro-symbolic methods. Third, the hardware gateway: the Minibot M1, launched with FLOKI, which works as a home assistant on the surface but also as a compact data node in the network. Every interaction, whether you’re asking it questions, having it move around, or letting it be teleoperated, contributes to the dataset and earns rewards.

All of this feeds into a marketplace where robotics data and RFMs can be bought or licensed, giving the network a genuine economic loop: real robots generate real experience that trains real models. RICE’s bet is simple but important for the funnel: if humanoids are going to reach AGI-level physical intelligence, the world can’t rely on a few companies hoarding experience. The data backbone has to be open, shared, and owned by the people and communities generating it.

With RICE, the learning layer of the funnel becomes complete: robots now have both the human perspective from Vader and the environmental perspective from their own real-world deployments.

Closing Thoughts

With these five projects added, the funnel feels less like a diagram and more like a functioning ecosystem. Each one sits naturally inside its layer, and once you lay them side by side, the structure of modern robotics becomes easier to see.

First is Learning & Curation, where Vader and RICE belong. They tackle the same bottleneck from two ends: Vader shows robots how humans move, while RICE shows them how the world behaves when robots enter real environments. Together, they give embodied AI something it has always lacked: continuous, varied, first-person experience.

Next is Middleware & Perception, which now includes OVER and Silencio. These two fill in the senses machines were missing. OVER gives robots spatial memory through world-scale 3D maps and camera-based positioning; Silencio gives them auditory context through real-world sound. One handles “where,” the other handles “what you can’t see.” Their presence in this layer shows how perception is becoming multimodal instead of vision-only.

Then there’s the Physical Layer, where we placed GAIB in the funnel. This is the part of the stack that deals with the economic reality behind all these ideas. Training, sensing, mapping, and deploying robots depend on compute, energy, and hardware, and GAIB turns those costs into a financial system that can scale. For now it fits inside the physical layer, but it may eventually deserve its own category; it is, in many ways, the financial plumbing that lets the rest of the stack leave the lab.

Here in v2, there are still no new entries in the Intelligence & Planning layer, the “mind” of the robot. Part of that is simply because this update focused on a small, curated set of five projects. But it also mirrors what we see across the space: true OS-level intelligence systems are fewer, slower-moving, and emerge later in the maturity curve.

And that timing makes sense. Intelligence can only grow once the foundations beneath it are stable. A robot can’t form reliable plans until it can trust what it sees, hears, and learns. The layers shaped by Vader and RICE (experience), Silencio and OVER (perception), and GAIB (infrastructure) need to settle before higher reasoning can take shape. You can’t build a brain before you build the senses, the body, and the environment that brain has to operate in.

As those foundations strengthen (richer data pipelines, denser maps, broader sensory inputs, and sustainable infrastructure), the intelligence layer will naturally emerge. Not forced, not hypothetical, but built on top of a world robots can finally understand. v2 of the funnel shows that the base layers are starting to click together; what comes next is the kind of reasoning that can only grow once a machine has something real to think about.

This is why robotics often gets blamed for “not being ready” when the reality is that the parts robots depend on (sensors, data networks, identity systems, hardware, mapping, payments) aren’t growing at the same speed. When one layer moves fast but another lags, the whole system looks slower than it really is. Crescendo’s funnel lays this out clearly by showing which layers are already mature and which ones still need to catch up.

Five projects might seem like a small addition, but they make the whole stack feel more alive. Each one nudges robotics forward in places that used to feel out of reach, and crypto just happens to be the tool that helps close those gaps. As the ecosystem grows, the funnel will grow with it: shifting, stretching, and reshaping as new parts of the puzzle fall into place.

More to map, more to understand. Until the next iteration of the Crypto-Robotics Funnel.

About Conglomerate

Conglomerate is a seasoned content writer and KOL in the crypto x AI x robotics space. Web3 gaming analyst, core contributor at The Core Loop, and pioneer of the onchain gaming hub and Crypto AI Resource Hub.

Book A Call

Curious how robotics, gaming, and AI can drive your next growth wave? Let’s talk. Book a call with Crescendo’s CEO Shash!